Research


Gestural Control in Multimedia Performances

Introduction

The Expressionist is a three-dimensional human interface designed to enhance vocal expression through interactive electronic voice filtering. The system consists of a two-handed magnetic motion-sensing controller and a computer program that processes the operator's recorded voice according to the performer's body motion. It is designed for electroacoustic vocal performers who are interested in multimedia live performance and wish to explore how body movement can enrich musical expression.

System Architecture

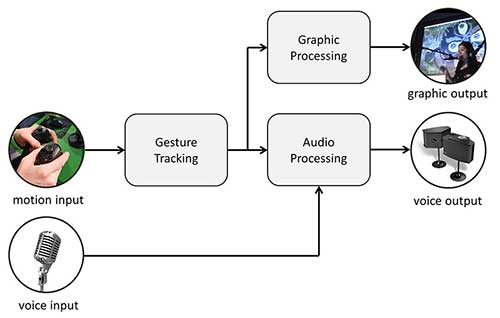

The proposed system consists of a 3D motion tracker, which captures the performer's hand gestures, and a microphone, which captures the performer's voice. The hand-gesture data are relayed to a computer that extracts absolute position, orientation, velocity, and acceleration values. These measurements are then converted into audio events that activate, in real time, vocal filters programmed according to the desired musical style. Finally, the resulting audio signal is sent to an amplifier for stage performance.
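Deriving velocity and acceleration from the tracker's position stream can be illustrated with finite differences. The sketch below is a minimal illustration under stated assumptions, not the Expressionist's actual code: it assumes evenly spaced samples at a hypothetical interval `dt`, and `MotionFeatures` is a hypothetical name. Velocity is a first difference of the last two positions and acceleration a second difference of the last three.

```python
from collections import deque
from typing import Deque, Optional, Tuple

Vec3 = Tuple[float, float, float]


class MotionFeatures:
    """Streaming finite-difference estimator for one hand controller (hypothetical)."""

    def __init__(self, dt: float) -> None:
        if dt <= 0:
            raise ValueError("dt must be positive")
        self.dt = dt  # assumed constant sampling interval, in seconds
        self.history: Deque[Vec3] = deque(maxlen=3)  # last three positions

    def update(self, position: Vec3) -> Tuple[Optional[Vec3], Optional[Vec3]]:
        """Add one sample; return (velocity, acceleration) once enough samples exist."""
        self.history.append(position)
        h = self.history
        velocity: Optional[Vec3] = None
        acceleration: Optional[Vec3] = None
        if len(h) >= 2:
            # first difference: (p[n] - p[n-1]) / dt
            velocity = tuple((b - a) / self.dt for a, b in zip(h[-2], h[-1]))
        if len(h) == 3:
            # second difference: (p[n] - 2 p[n-1] + p[n-2]) / dt^2
            acceleration = tuple(
                (c - 2.0 * b + a) / self.dt ** 2 for a, b, c in zip(h[-3], h[-2], h[-1])
            )
        return velocity, acceleration
```

Differentiating amplifies sensor noise, so a practical version would likely low-pass filter the positions (or the derived values) before mapping them to audio events.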

User Interface

The Expressionist's user interface adopts a paired design: a base station emits a low-energy magnetic field, which both hand controllers measure to estimate their precise position and orientation. The controllers and the base station each contain three magnetic coils. Together with amplification circuitry, a digital signal processor, and a positioning algorithm, these coils translate the measured field into position and orientation data. Because tracking runs in real time, the response between the performer's hand motions and the actions commanded by the computer is nearly instantaneous.
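Why three coils on each side are enough to recover distance can be sketched with an idealized magnetic dipole model. This is a textbook simplification, not the Expressionist's positioning algorithm: it assumes a static, undistorted field, a lumped calibration constant `k` (coil gains times μ0/4π), and a hypothetical 3×3 "coupling matrix" whose entry [i][j] is the field seen by sensor coil i when source coil j is driven. In this model the matrix's Frobenius norm equals √6·k/r³ whatever the sensor's direction or orientation, so the range r follows directly; a full solver would additionally recover direction and orientation and handle hemisphere ambiguity.

```python
import math
from typing import List

Matrix = List[List[float]]


def coupling_matrix(x: float, y: float, z: float, k: float = 1.0) -> Matrix:
    """Ideal dipole coupling between a three-coil source and an aligned three-coil sensor.

    Entry [i][j] is (k / r**3) * (3 * u[i] * u[j] - delta_ij), where u is the unit
    vector from source to sensor and k is an assumed, lumped calibration constant.
    """
    r = math.sqrt(x * x + y * y + z * z)
    u = (x / r, y / r, z / r)
    scale = k / r ** 3
    return [[scale * (3.0 * u[i] * u[j] - (1.0 if i == j else 0.0)) for j in range(3)]
            for i in range(3)]


def rotate_sensor_z(m: Matrix, angle: float) -> Matrix:
    """Re-express the measurements in a sensor frame turned by `angle` about z."""
    c, s = math.cos(angle), math.sin(angle)
    r_t = [[c, s, 0.0], [-s, c, 0.0], [0.0, 0.0, 1.0]]  # transpose of R_z(angle)
    return [[sum(r_t[i][n] * m[n][j] for n in range(3)) for j in range(3)]
            for i in range(3)]


def estimate_range(m: Matrix, k: float = 1.0) -> float:
    """Distance from the Frobenius norm, which is invariant to direction and orientation.

    For the ideal model, ||M||_F = sqrt(6) * k / r**3, so r = (sqrt(6) * k / ||M||_F) ** (1/3).
    """
    norm = math.sqrt(sum(v * v for row in m for v in row))
    return (math.sqrt(6.0) * k / norm) ** (1.0 / 3.0)


# Sensor at (0.3, 0.4, 0.5) and rotated by 0.7 rad; the true range is sqrt(0.5), about 0.7071.
measured = rotate_sensor_z(coupling_matrix(0.3, 0.4, 0.5), 0.7)
print(round(estimate_range(measured), 4))
```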

Gesture Mapping

Postures are distinguished by first reading the absolute position and orientation of the magnetic hand controllers and then computing their relative position and angular offsets. Once a posture has been identified, a state machine transitions smoothly from one filter selection to the next by interpolating between them. This interpolation reduces the audible clicks that sudden, discrete changes typically produce. Depending on the desired effect and the performer's hand movements, different filter functions modify the audio signal. Each filter may alter different parameters of the voice signal, for instance amplitude, frequency, and phase, reverberation, or the spatial spread of the sound in an auditorium. The relationship between gestural variables and sound effects varies greatly with the style of performance.
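The posture-recognition and smooth-transition steps above can be sketched as follows. Everything here is an illustrative assumption rather than the Expressionist's implementation: orientation is reduced to a single yaw angle (a full version would use 3D rotations), postures are matched to hypothetical templates by nearest distance with a rejection threshold `tol`, and the state machine fades linearly between hypothetical filter presets over `transition_s` seconds.

```python
import math
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]


def wrap(angle: float) -> float:
    """Wrap an angle to [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


@dataclass
class Posture:
    offset: Vec3  # right-hand position minus left-hand position (hypothetical template)
    yaw: float    # right-hand yaw minus left-hand yaw, in radians


def relative_features(left_pos: Vec3, left_yaw: float,
                      right_pos: Vec3, right_yaw: float) -> Tuple[Vec3, float]:
    """Relative position and yaw offset of the right hand with respect to the left."""
    offset = (right_pos[0] - left_pos[0], right_pos[1] - left_pos[1],
              right_pos[2] - left_pos[2])
    return offset, wrap(right_yaw - left_yaw)


def classify(offset: Vec3, dyaw: float, templates: Dict[str, Posture],
             tol: float = 0.15, angle_weight: float = 0.1) -> Optional[str]:
    """Nearest-template posture match; None if no template is within `tol`."""
    best_name, best_cost = None, math.inf
    for name, t in templates.items():
        cost = math.dist(offset, t.offset) + angle_weight * abs(wrap(dyaw - t.yaw))
        if cost < best_cost:
            best_name, best_cost = name, cost
    return best_name if best_cost <= tol else None


class FilterStateMachine:
    """Two states, 'steady' and 'transitioning'; fades linearly between presets."""

    def __init__(self, presets: Dict[str, Dict[str, float]], initial: str,
                 transition_s: float = 0.2) -> None:
        self.presets = presets  # all presets are assumed to share the same keys
        self.target = initial
        self.state = "steady"
        self.current = dict(presets[initial])
        self.start = dict(self.current)
        self.progress = 1.0
        self.transition_s = transition_s

    def on_posture(self, name: Optional[str]) -> None:
        if name is None or name == self.target:
            return  # unrecognized or unchanged posture: keep the current target
        self.start = dict(self.current)  # fade from wherever we are, even mid-fade
        self.target = name
        self.progress = 0.0
        self.state = "transitioning"

    def step(self, dt: float) -> Dict[str, float]:
        """Advance the fade by dt seconds and return the current filter parameters."""
        if self.state == "transitioning":
            self.progress = min(1.0, self.progress + dt / self.transition_s)
            goal = self.presets[self.target]
            self.current = {key: a + (goal[key] - a) * self.progress
                            for key, a in self.start.items()}
            if self.progress >= 1.0:
                self.state = "steady"
        return dict(self.current)
```

Starting each fade from the current (possibly half-faded) values means a posture change in the middle of a transition does not cause a jump, which is the kind of discontinuity the interpolation is meant to avoid.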

High and Low: A Duo Performance

To evaluate some of the possibilities the system offers, we staged an improvised piece for a vocalist and a musician playing the celletto. We selected a divergent gesture-mapping strategy, which allowed the vocalist to control parameters of her voice. During the improvisation, the vocalist triggered sound effects and controlled the output of the celletto. A pitch-shift filter was applied to create a second vocal layer emulating Tibetan throat singing. Sound panning and echoes were controlled in real time by the vocalist's hand gestures.
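A divergent (one-to-many) mapping, in which a single gestural variable drives several sound parameters, can be sketched as below. The inputs are assumed to be already normalized hand features, and every output range is a hypothetical choice, not a value used in the performance. Horizontal hand position drives a constant-power pan (left and right gains whose squares sum to 1), hand height drives both echo delay and echo feedback, and `pitch_ratio` gives the equal-tempered frequency ratio a pitch shifter would apply for a shift of a given number of semitones.

```python
import math
from typing import Dict


def pitch_ratio(semitones: float) -> float:
    """Equal-tempered frequency ratio for a shift in semitones (negative = lower)."""
    return 2.0 ** (semitones / 12.0)


def divergent_map(hand_x: float, hand_height: float) -> Dict[str, float]:
    """One-to-many mapping from two hand features to several effect parameters.

    hand_x in [-1, 1] (left to right) and hand_height in [0, 1] are assumed to be
    normalized already; all output ranges below are hypothetical.
    """
    x = max(-1.0, min(1.0, hand_x))
    h = max(0.0, min(1.0, hand_height))
    theta = (x + 1.0) * math.pi / 4.0  # maps [-1, 1] onto [0, pi/2]
    return {
        "gain_left": math.cos(theta),    # constant-power pan: gain_left**2 + gain_right**2 == 1
        "gain_right": math.sin(theta),
        "echo_delay_s": 0.1 + 0.4 * h,   # higher hand: longer echo...
        "echo_feedback": 0.2 + 0.5 * h,  # ...and more repeats, kept below 1 for stability
    }
```

Constant-power panning is used here because a linear crossfade would make the voice audibly quieter at the center position.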

Mandala: A Live Performance with Graphic Expression

The word “mandala” comes from Sanskrit and means “circle.” It represents wholeness and can be seen as a model for the organizational structure of life itself. In Tibet, as part of a spiritual practice, monks create mandalas from colored sand. A sand mandala may take days or weeks to complete. When it is finished, the monks gather in a colorful ceremony, chanting in deep tones (Tibetan throat singing) as they sweep the sand spirally back into nature, symbolizing the impermanence of life and the world.

As part of a musical performance created at Stanford University, an interactive display of a sand mandala was choreographed with the vocal performance. Near the end of the piece, the singer's hand motions were choreographed to initiate the destruction of the virtual mandala. Our graphic and dynamic modeling framework simulated, in real time, the physical interaction between the performer's captured hand motions and the small-mass particles composing the virtual mandala.
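The hand-to-particle interaction can be illustrated with a very small particle simulation. This is a generic stand-in, since the modeling framework itself is not described here: the hand is assumed to act as a repulsive disc of hypothetical `radius` whose push falls linearly to zero at its edge, each grain has a hypothetical small `mass`, a linear drag term stands in for friction so grains come to rest, and motion is integrated with semi-implicit Euler in 2D with no grain-to-grain collisions.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Particle:
    x: float
    y: float
    vx: float = 0.0
    vy: float = 0.0
    mass: float = 0.01  # hypothetical mass of one sand grain


def ring_of_grains(radius: float, count: int) -> List[Particle]:
    """Place `count` grains evenly on a circle: one ring of a toy mandala."""
    return [Particle(radius * math.cos(2 * math.pi * i / count),
                     radius * math.sin(2 * math.pi * i / count)) for i in range(count)]


def step(particles: List[Particle], hand: Tuple[float, float], dt: float,
         radius: float = 0.2, strength: float = 0.05, damping: float = 2.0) -> None:
    """Advance all grains by dt seconds with semi-implicit Euler integration."""
    hx, hy = hand
    for p in particles:
        dx, dy = p.x - hx, p.y - hy
        d = math.hypot(dx, dy)
        fx = fy = 0.0
        if 1e-9 < d < radius:
            # repulsive push away from the hand, fading linearly to zero at `radius`
            magnitude = strength * (1.0 - d / radius)
            fx, fy = magnitude * dx / d, magnitude * dy / d
        # acceleration = force / mass minus velocity-proportional drag
        ax = fx / p.mass - damping * p.vx
        ay = fy / p.mass - damping * p.vy
        p.vx += ax * dt  # update velocity first...
        p.vy += ay * dt
        p.x += p.vx * dt  # ...then position using the new velocity
        p.y += p.vy * dt
```

For example, calling `step(grains, hand_xy, 1 / 60)` once per rendered frame, with `hand_xy` taken from the tracker, scatters any grains the hand passes over while leaving distant grains untouched.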

References

- The Expressionist: A Gestural Mapping Instrument for Voice and Multimedia Enrichment. The International Journal of New Media, Technology, and the Arts, Vol. 10, Issue 1, 2015. ISSN: 2326-9987.

- The Virtual Mandala. In Proceedings of the 21st International Symposium on Electronic Art (ISEA), Vancouver, Canada.